Hey guys, let’s start this article in a different way. One of my friends has very sharp eyes and is very good at spot-the-difference games, where you have to find a few small differences between two nearly identical images. While we were playing, one friend would copy a picture as closely as he could, and another friend had to tell which picture was real and which was fake. The artist tried to reproduce every detail so that there would be no difference between the two pictures, but my talented friend always identified his drawing as the fake one.

One is trying to shrink the difference between the real and the fake, while the other is trying to expose it, spotting even the tiniest difference to find the fake.

So let’s use the same idea in this article: one model generates and another one discriminates. We use two models, a Generator and a Discriminator. The generator tries to generate images that are as realistic as possible, and the discriminator tries to tell whether an image is real or fake.

An application makes this concrete. The generator keeps trying to produce a clear, deblurred image from a blurred one, and the discriminator tries to tell whether an image is a real clear image or the generator’s deblurred version. By training the two models against each other, we can eventually generate a deblurred image from a blurred one. This kind of deep neural network has many other applications as well.

This model is called a Generative Adversarial Network (GAN), and its core idea is the following:

- GANs involve a game-theoretic approach between two models:

1. Generator (G): Learns to create data that looks similar to real data.

2. Discriminator (D): Learns to distinguish between real and generated (fake) data.

Adversarial Process:

The Generator tries to fool the Discriminator by generating realistic data.

The Discriminator tries to correctly classify real vs. fake data.

Over time, both networks improve:

1. The Generator produces more realistic data.

2. The Discriminator becomes better at detecting fakes.

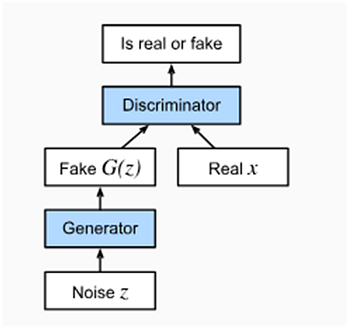

Now let’s see what the model looks like.

Here we pass random noise to the generator, like handing it a blank page, and the generator turns it into a fake output. We then give both the real and the fake data to the discriminator to see whether it can identify the fake one.

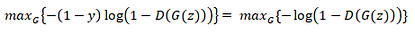
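The flow just described can be sketched in a few lines of pure Python. Everything here is hypothetical and deliberately tiny: the 1-D "real data" drawn from N(5, 1), the affine `generator`, and the logistic-regression `discriminator` with hand-picked weights stand in for real networks and are not the deblurring models used later.

```python
import math
import random

def generator(z, scale=1.5, shift=1.0):
    # Hypothetical toy generator: an affine map turning noise into a 1-D "sample"
    return scale * z + shift

def discriminator(x, weight=1.2, bias=-4.0):
    # Hypothetical toy discriminator: logistic regression returning P(x is real)
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

rng = random.Random(0)
real = [rng.gauss(5.0, 1.0) for _ in range(4)]   # stand-in real data ~ N(5, 1)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]  # the "blank page": z ~ N(0, 1)
fake = [generator(z) for z in noise]             # G(z)

# The discriminator scores both the real and the fake samples
for x in real:
    print(f"real {x:5.2f} -> D(x)    = {discriminator(x):.2f}")
for x in fake:
    print(f"fake {x:5.2f} -> D(G(z)) = {discriminator(x):.2f}")
```

With these hand-picked weights the discriminator tends to give real samples high scores and fakes low ones; training would adjust the generator's `scale` and `shift` until that gap closes.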

Let’s now look at the mathematics of how the model works.

Let the input be noise z, which is passed to the generator G.

The generator’s output is G(z).

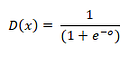

The real data x and the generated data G(z) are both passed to the discriminator D. We write the discriminator’s output for an input x as D(x), so for a generated sample it is D(G(z)).

Let the label y be 1 for real inputs and 0 for fake ones.

The discriminator is a binary classifier, so it uses cross-entropy as its loss function and produces its output with a sigmoid function. Suppose the discriminator’s final fully connected layer outputs a score w, which is passed to the sigmoid. The discriminator’s output can then be written as

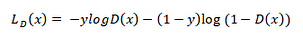

This output is fed into the binary cross-entropy loss, so the discriminator’s loss is

$$-y\log D(x) - (1-y)\log\bigl(1-D(x)\bigr)$$

The discriminator tries to minimize this loss, while the generator tries to increase it. This tug-of-war is how the generator learns to produce increasingly realistic outputs.

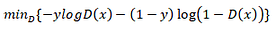

We train the discriminator to minimize this loss, i.e., to solve

$$\min_D \left\{-y\log D(x) - (1-y)\log\bigl(1-D(x)\bigr)\right\}$$
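The discriminator’s sigmoid output and its cross-entropy loss are easy to check numerically. A minimal pure-Python sketch; `sigmoid` and `discriminator_loss` are illustrative names, and w stands for the score from the discriminator’s last fully connected layer.

```python
import math

def sigmoid(w):
    # D's output: squash the final fully connected score w into a probability
    return 1.0 / (1.0 + math.exp(-w))

def discriminator_loss(w, y):
    # Binary cross-entropy for one sample: -y log D - (1 - y) log(1 - D)
    d = sigmoid(w)
    return -y * math.log(d) - (1 - y) * math.log(1 - d)

# A confident, correct discriminator gets a small loss ...
print(discriminator_loss(3.0, 1))   # real sample, D ≈ 0.95 -> loss ≈ 0.049
print(discriminator_loss(-3.0, 0))  # fake sample, D ≈ 0.05 -> loss ≈ 0.049
# ... while a confident, wrong one is penalized heavily
print(discriminator_loss(-3.0, 1))  # real sample scored as fake -> loss ≈ 3.049
```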

From a source of randomness, say a normal distribution N(0, 1), the generator takes a random input z, which we call the latent variable, and produces G(z) as its output. The generator’s goal is to fool the discriminator into classifying G(z) as real data, so we want D(G(z)) ≈ 1.

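For a given discriminator D, we update the parameters of the generator G to maximize the cross-entropy loss for fake samples (y = 0), i.e.,

$$\max_G \left\{-(1-y)\log\bigl(1-D(G(z))\bigr)\right\} = \max_G \left\{-\log\bigl(1-D(G(z))\bigr)\right\}$$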

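In practice, this objective trains poorly. Early in training, the discriminator easily rejects the generator’s samples, so D(G(z)) ≈ 0; the loss above is then close to 0 and its gradients are too small for the generator to make progress. So instead we minimize the following loss for the generator:

$$\min_G \left\{-y\log D(G(z))\right\} = \min_G \left\{-\log D(G(z))\right\}$$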

Here we feed G(z) to the discriminator but give it the label y = 1.

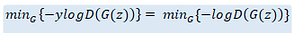
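Why switch to -log D(G(z))? Write D(G(z)) = σ(w), where w is the discriminator’s logit for a fake sample. The derivative of log(1 - σ(w)) with respect to w is -σ(w), while the derivative of -log σ(w) is -(1 - σ(w)). The sketch below (illustrative helper names) evaluates both: when the discriminator confidently rejects a fake (very negative w), the original objective’s gradient nearly vanishes, while the non-saturating one stays close to -1.

```python
import math

def sigmoid(w):
    # D(G(z)) as a function of the discriminator's logit w for a fake sample
    return 1.0 / (1.0 + math.exp(-w))

def saturating_grad(w):
    # d/dw log(1 - sigmoid(w)) = -sigmoid(w): gradient of the original generator objective
    return -sigmoid(w)

def non_saturating_grad(w):
    # d/dw [-log sigmoid(w)] = -(1 - sigmoid(w)): gradient of the loss minimized in practice
    return -(1.0 - sigmoid(w))

for w in (-6.0, -2.0, 0.0, 2.0):
    print(f"D(G(z)) = {sigmoid(w):.4f}  "
          f"original: {saturating_grad(w):+.4f}  non-saturating: {non_saturating_grad(w):+.4f}")
```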

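Overall, the generator and the discriminator play a minimax game with the following objective:

$$\min_D \max_G \left\{-\mathbb{E}_{x\sim\text{Data}}\log D(x) - \mathbb{E}_{z\sim\text{Noise}}\log\bigl(1-D(G(z))\bigr)\right\}$$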

The expectation $\mathbb{E}_{x\sim\text{Data}}$ means “take the average over all x sampled from the real data distribution.” Here $\log D(x)$ is the log probability that D assigns to x being real.

$z\sim\text{Noise}$ means that z is drawn from a random noise distribution. The second expectation averages $\log\bigl(1-D(G(z))\bigr)$, the log probability that D correctly classifies G(z) as fake, over all sampled z.
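The objective can be estimated by replacing each expectation with an average over samples. A minimal sketch with a hypothetical 1-D setup: real data from N(5, 1), an untrained generator that just returns its noise, and a fixed logistic discriminator. A discriminator that always answers 1/2 (the optimal one once the generator matches the data distribution exactly) scores 2 log 2 ≈ 1.386 whatever the samples are.

```python
import math
import random

def gan_objective(d, g, real_samples, noise_samples):
    # Sample-average estimate of -E_x[log D(x)] - E_z[log(1 - D(G(z)))]
    real_term = sum(math.log(d(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(math.log(1.0 - d(g(z))) for z in noise_samples) / len(noise_samples)
    return -real_term - fake_term

rng = random.Random(0)
real = [rng.gauss(5.0, 1.0) for _ in range(1000)]   # hypothetical real data ~ N(5, 1)
noise = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # z ~ N(0, 1)

def identity_generator(z):
    # Hypothetical untrained generator: its fakes are just the noise, ~ N(0, 1)
    return z

def sharp_discriminator(x):
    # Hypothetical discriminator that calls samples above 2.5 "real"
    return 1.0 / (1.0 + math.exp(-2.0 * (x - 2.5)))

print(gan_objective(sharp_discriminator, identity_generator, real, noise))  # small: D separates well
print(gan_objective(lambda x: 0.5, identity_generator, real, noise))        # 2 log 2 ≈ 1.386
```

The discriminator pushes this value down; the generator (in the original minimax form) pushes it up.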

Let’s now work through an application of GANs: generating a deblurred image from a blurred one, using a generator and a discriminator.

The dataset is an image dataset of clear images (CIFAR-10 in the code below), which we blur with a Gaussian blur. A transform is then applied to both the clear and the blurred images: each image is resized, converted to a tensor, and normalized. We use the binary cross-entropy loss and the Adam optimizer.

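```python
from torch.utils.data import DataLoader

# Load Dataset
# BlurredCIFAR10 (CIFAR-10 images paired with blurred copies) and transform
# (resize, ToTensor, Normalize) are the dataset and preprocessing described above
train_dataset = BlurredCIFAR10(train=True, transform=transform, download=True)
test_dataset = BlurredCIFAR10(train=False, transform=transform, download=True)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)
```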

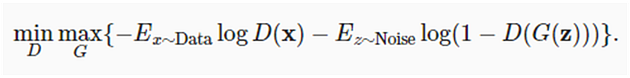

Let’s look at the two models.

This is the generator model: very simple, but a good place to start learning.

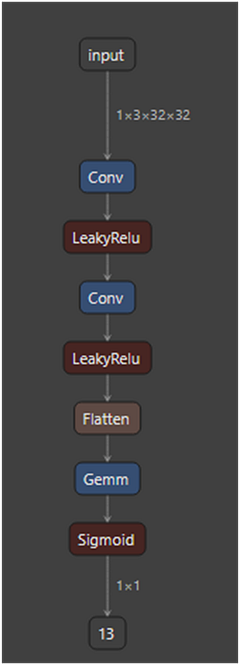

This is the discriminator model, which learns to tell real images from fake ones.

```python
import torch.nn as nn

# Generator: maps a blurred 3-channel image to a deblurred image of the same size
class Generator(nn.Module):
    def __init__(self):
        super(Generator, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),  # stride 1, padding 1 keeps H x W
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(64, 3, kernel_size=3, stride=1, padding=1),  # back to 3 RGB channels
            nn.Tanh()  # outputs in [-1, 1], so target images should be normalized to that range
        )

    def forward(self, x):
        return self.model(x)


# Discriminator: outputs the probability that a 3 x 32 x 32 image is real
class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1),    # 32x32 -> 16x16
            nn.LeakyReLU(0.2),
            nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),  # 16x16 -> 8x8
            nn.LeakyReLU(0.2),
            nn.Flatten(),
            nn.Linear(128 * 8 * 8, 1),  # assumes 32x32 inputs
            nn.Sigmoid()  # probability of "real", paired with nn.BCELoss
        )

    def forward(self, x):
        return self.model(x)
```
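The discriminator’s `nn.Linear(128 * 8 * 8, 1)` only fits if the input images are 32 × 32. The standard Conv2d output-size formula (with dilation 1), floor((size + 2·padding - kernel) / stride) + 1, shows why; `conv2d_out` is an illustrative helper, not part of PyTorch.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0):
    # Conv2d output size for dilation 1: floor((size + 2*padding - kernel) / stride) + 1
    return (size + 2 * padding - kernel_size) // stride + 1

size = 32  # CIFAR-10 images are 32x32

gen_size = size
for _ in range(3):
    gen_size = conv2d_out(gen_size, 3, stride=1, padding=1)  # generator: three stride-1 convs
print(gen_size)  # 32: the generator keeps the image size

disc_size = conv2d_out(conv2d_out(size, 3, stride=2, padding=1), 3, stride=2, padding=1)
print(disc_size, 128 * disc_size * disc_size)  # 8 8192, matching nn.Linear(128 * 8 * 8, 1)
```

If the transform resized images to any other size, the flattened feature count would change and the `Linear` layer would need a matching input size.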

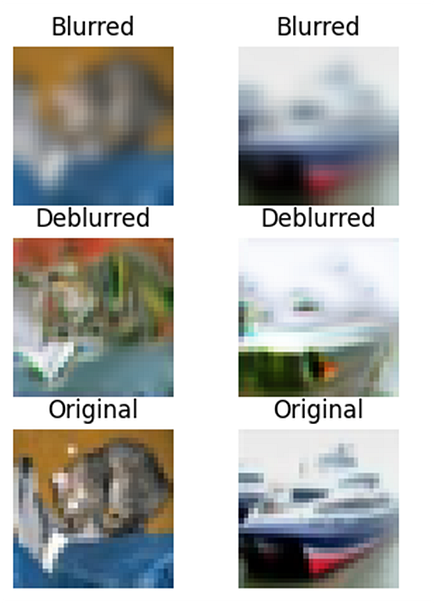

Using these models, we generated the deblurred outputs.

Here the model tries to generate a clear version of each blurred image. The model has not been trained for long, yet its deblurred output already somewhat resembles the original. Fine-tuning or hyperparameter tuning can be used to make it perform better.
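The training loop that produced these outputs isn’t listed here. A minimal sketch of one adversarial update, under assumptions: `train_step` and `l1_weight` are hypothetical names, each batch is assumed to be a (blurred, sharp) pair, and the Adam settings in the usage comment (lr=2e-4, betas=(0.5, 0.999)) are common DCGAN-style defaults rather than the author’s values. The generator uses the non-saturating loss from the math section; the optional L1 term is a common addition in image-to-image GANs and is off by default.

```python
import torch
import torch.nn as nn

bce = nn.BCELoss()  # the discriminator already ends in Sigmoid, so plain BCELoss fits

def train_step(generator, discriminator, opt_g, opt_d, blurred, sharp, l1_weight=0.0):
    """One adversarial update on a batch of (blurred, sharp) images; returns (d_loss, g_loss)."""
    real_labels = torch.ones(sharp.size(0), 1, device=sharp.device)
    fake_labels = torch.zeros(sharp.size(0), 1, device=sharp.device)
    fake = generator(blurred)  # G's attempt at deblurring

    # 1) Discriminator: real sharp images -> 1, generated images -> 0.
    #    detach() keeps this update from flowing back into the generator.
    opt_d.zero_grad()
    d_loss = bce(discriminator(sharp), real_labels) + bce(discriminator(fake.detach()), fake_labels)
    d_loss.backward()
    opt_d.step()

    # 2) Generator: non-saturating loss, i.e. its fakes are labeled as real (y = 1).
    opt_g.zero_grad()
    g_loss = bce(discriminator(fake), real_labels)
    if l1_weight > 0:
        # Optional (assumed) pixel-wise term tying the output to the sharp target
        g_loss = g_loss + l1_weight * nn.functional.l1_loss(fake, sharp)
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()

# Hypothetical usage with the Generator, Discriminator, and train_loader above:
# G, D = Generator(), Discriminator()
# opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
# opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))
# for epoch in range(num_epochs):
#     for blurred, sharp in train_loader:
#         d_loss, g_loss = train_step(G, D, opt_g, opt_d, blurred, sharp)
```

The learning rates, betas, number of epochs, and `l1_weight` are exactly the kind of knobs that hyperparameter tuning would adjust.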

That’s it for this article!

— Akhil Soni