In my blog (Regularization of ML models), I have already discussed different methods of regularization for a linear model. Regularizing a model means constraining its parameters, for example by adding a penalty on large weights to the cost function. This keeps the model from overfitting the training data, so that it generalizes better to new data.

A model can be regularized in several ways, such as ridge regression, lasso regression and elastic net. Each uses a different penalty and suits different kinds of data and situations.
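For reference, here is a minimal sketch of these three regularized linear models in scikit-learn. The toy data and the `alpha` and `l1_ratio` values are illustrative assumptions, not tuned settings.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso, Ridge

# Hypothetical toy data: only the first of five features actually matters
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3 * X[:, 0] + rng.normal(scale=0.5, size=200)

models = {
    "ridge": Ridge(alpha=1.0),                           # l2 penalty: shrinks all weights
    "lasso": Lasso(alpha=0.1),                           # l1 penalty: can set weights to exactly 0
    "elastic_net": ElasticNet(alpha=0.1, l1_ratio=0.5),  # a mix of l1 and l2 penalties
}

for name, model in models.items():
    model.fit(X, y)
    print(name, np.round(model.coef_, 2))
```

Comparing the printed coefficients shows how each penalty treats the four irrelevant features differently.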

While training a model, we first fit it on the training data. We then make predictions on the validation data, compare them with the correct values, and compute a performance measure to see how well the model performs. This also tells us whether the model is properly trained. During training, the model may perform better in some epochs and worse in others. We want the model from the point where it is trained just right: the cost function is as low as possible, and the validation error is low as well.

To achieve this, we train the model with an iterative learning algorithm and stop training as soon as the validation error reaches its minimum. This is called early stopping.

Suppose we are continuously training a model on the training data and computing its performance measure after every epoch. The validation error first decreases. As soon as it starts rising again after reaching its minimum, we keep the model from that minimum and stop training. That saved model is the one with the lowest validation error, so there is no need to train it any further.
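The stopping rule just described can be sketched in plain Python over a list of per-epoch validation errors. The function name `early_stopping_point` and the sample error values are hypothetical, chosen only to illustrate the rule.

```python
def early_stopping_point(val_errors):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation errors.

    Training stops at the first epoch whose error is higher than the best error
    seen so far; best_epoch is the epoch whose model would have been saved.
    """
    best_epoch, best_error = None, float("inf")
    for epoch, error in enumerate(val_errors):
        if error < best_error:
            best_epoch, best_error = epoch, error  # new minimum: save this model
        elif error > best_error:
            return best_epoch, epoch  # error started rising: stop here
    return best_epoch, len(val_errors) - 1  # error never rose: trained to the end


print(early_stopping_point([5.0, 3.2, 2.1, 1.8, 2.0, 2.4]))  # → (3, 4)
```

In practice the validation curve of Stochastic Gradient Descent is noisy, so a common variation (not part of the rule above) is to wait a few epochs, often called a "patience", before concluding that the error is really rising.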

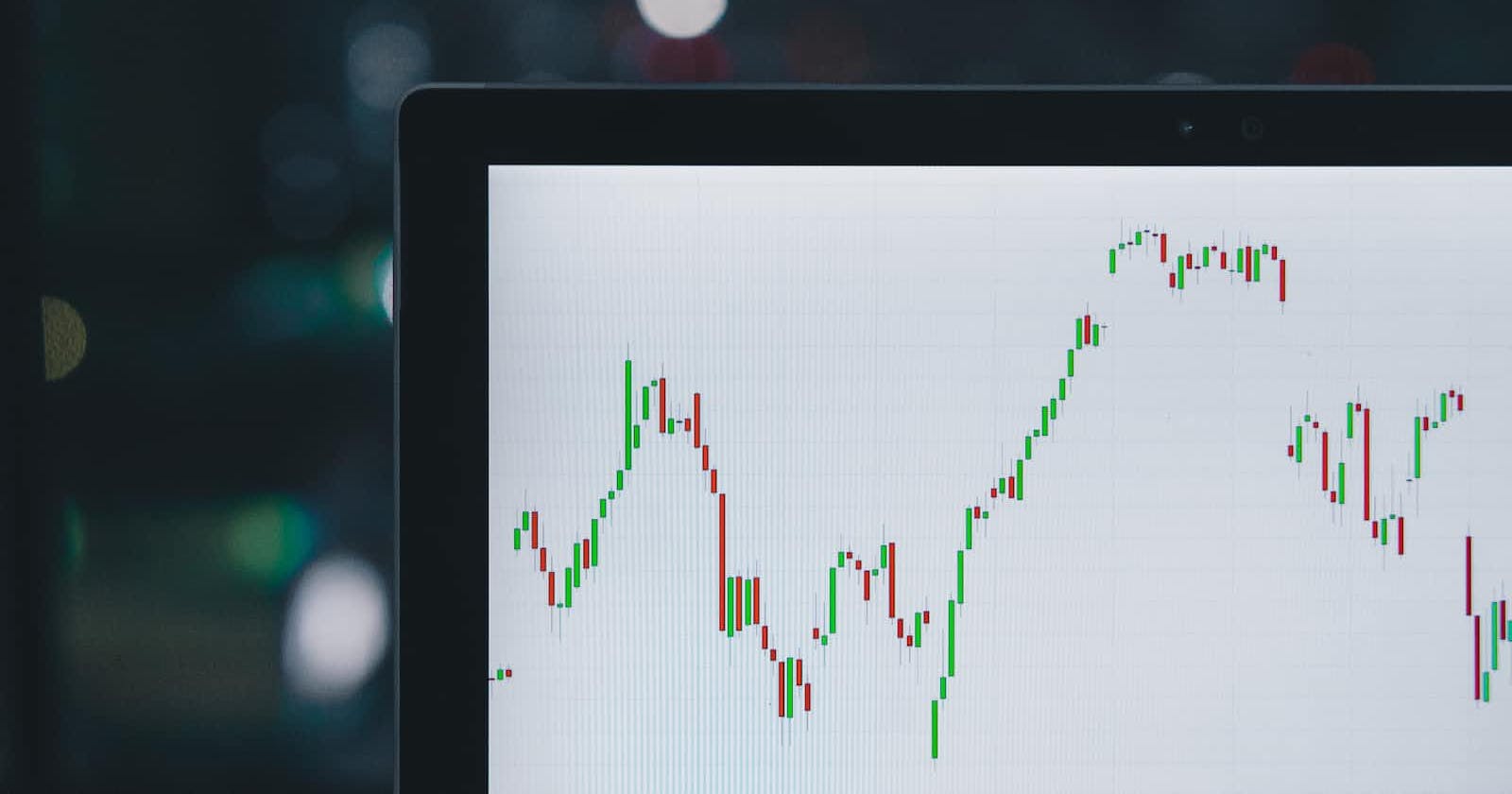

This is a graph of a regression model being trained with Gradient Descent. As the number of epochs increases, the model learns: the error on the training set decreases, and so does the error on the validation set. After a while, however, the validation error stops decreasing and starts to rise again, which means the model has begun to overfit the training data. We want the trained model from the point where the validation error is at its minimum, just before overfitting starts.
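The two curves in such a graph can be recorded by evaluating the model on both sets after every epoch. This sketch assumes scikit-learn's `SGDRegressor.partial_fit`, which runs one epoch per call, and uses hypothetical synthetic data. Whether the validation curve actually turns upward depends on the model and data; an overly flexible model, such as a high-degree polynomial, is the typical case.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Hypothetical example data: a noisy linear relationship
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.3, size=500)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=42)

sgd_reg = SGDRegressor(learning_rate="constant", eta0=0.001, random_state=42)
train_errors, val_errors = [], []

for epoch in range(100):
    sgd_reg.partial_fit(X_train, y_train)  # one more pass over the training set
    train_errors.append(mean_squared_error(y_train, sgd_reg.predict(X_train)))
    val_errors.append(mean_squared_error(y_val, sgd_reg.predict(X_val)))

# Epoch with the lowest validation error, i.e. where early stopping would keep the model
best_epoch = int(np.argmin(val_errors))
print(best_epoch, val_errors[best_epoch])
```

Plotting `train_errors` and `val_errors` against the epoch number (for example with matplotlib) gives curves like the ones in the graph.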

This technique can be applied to any iterative learning algorithm, such as Gradient Descent. For Stochastic Gradient Descent, scikit-learn provides SGDRegressor, and the code below applies early stopping to it:

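```python
from copy import deepcopy

import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Hypothetical example data: a noisy quadratic (replace with your own X, y)
rng = np.random.default_rng(42)
X = 6 * rng.random((100, 1)) - 3
y = 0.5 * X ** 2 + X + 2 + rng.normal(size=(100, 1))

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=42)

# Expand to polynomial features and scale them (degree 90 is an illustrative choice
# that makes the model flexible enough to overfit)
poly_scaler = make_pipeline(PolynomialFeatures(degree=90, include_bias=False), StandardScaler())
X_train_poly_scaled = poly_scaler.fit_transform(X_train)
X_val_poly_scaled = poly_scaler.transform(X_val)

# max_iter=1 with warm_start=True: each fit() call runs exactly one more epoch,
# continuing from the current weights; penalty=None leaves early stopping as the
# only regularization
sgd_reg = SGDRegressor(max_iter=1, tol=None, warm_start=True, penalty=None,
                       learning_rate="constant", eta0=0.0005)

minimum_val_error = float("inf")
best_epoch = None
best_model = None

for epoch in range(1000):
    sgd_reg.fit(X_train_poly_scaled, y_train.ravel())  # continues where it left off
    y_val_predict = sgd_reg.predict(X_val_poly_scaled)
    val_error = mean_squared_error(y_val, y_val_predict)
    if val_error < minimum_val_error:
        minimum_val_error = val_error
        best_epoch = epoch
        # clone() would return an *unfitted* copy, so keep a deep copy of the trained model
        best_model = deepcopy(sgd_reg)
```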

Here `best_model` holds the trained SGDRegressor that gave the minimum error on the validation set, and `best_epoch` records the epoch at which that minimum was reached.
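scikit-learn can also do this bookkeeping itself: `SGDRegressor` has an `early_stopping` option that sets aside a fraction of the training data as a validation set and stops when the validation score has not improved by at least `tol` for `n_iter_no_change` consecutive epochs. A minimal sketch, with hypothetical data and illustrative parameter values:

```python
import numpy as np
from sklearn.linear_model import SGDRegressor

# Hypothetical example data
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=1000)

sgd_reg = SGDRegressor(
    max_iter=1000,             # upper bound on the number of epochs
    early_stopping=True,       # hold out part of the training data for validation
    validation_fraction=0.1,   # 10% of the training data becomes the validation set
    n_iter_no_change=5,        # stop after 5 epochs without enough improvement...
    tol=1e-3,                  # ...where "enough" means a score gain of at least tol
    random_state=0,
)
sgd_reg.fit(X, y)
print(sgd_reg.n_iter_)  # number of epochs actually run
```

The manual loop gives full control over the validation set and over which model is kept; the built-in option is shorter but uses its own random split of the training data.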

Thank you.

Akhil Soni

You can connect with me on LinkedIn.